What we're talking about here is "Governance, Risk, & Compliance" (GRC) as a discipline, not the product niche that seems to be the favorite catch-all for startups these days. Simply buying a license for IT UCF and throwing a UI on it does not a GRC product make, and it certainly does not address the overall discipline of GRC, which is fundamental to the successful management of an organization and the risk contained therein. All organizations have a governance structure, but they're not universally integrating security and risk management practices into those structures. Moreover, compliance (aka "checkbox security") has taken far too prevalent a role in organizations today, rather than being a component of the overall governance and risk management strategies.

Approaching GRC as a discipline, it appears that there are five (5) main areas where organizations should invest time, energy, and resources:

1) Survivability Strategy & Legal Defensibility

First and foremost, existing governance needs to be bolstered by a change in direction and strategy. Instead of the traditional approach of building levees, it's imperative that executives shift to a survivability strategy that focuses on how to break in manageable ways while continuing operations and minimizing losses and disruption. At the same time, this strategy should leverage legal defensibility as part of the risk management approach to ensure that decisions are a) sound, b) documented, and c) represent meaningful and measurable change for the organization.

2) Formalized Methods

In addition to changing the overall strategy, it is also vital to recognize the role and importance of leveraging formalized methods in providing a solid, improvable, repeatable basis for decisions. Adopting formal risk assessment, analysis, and management methods as part of an overall decision analysis and management approach will improve decision quality while also meeting legal defensibility requirements.

Application security initiatives should be captured as integrated methods within an overall formalized software development methodology (even if an agile/rapid-style process is in use). Building security in is far better than bolting it on after the fact, and it will reduce long-term maintenance costs as well. Moreover, putting appsec tools (with appropriate training) into the hands of developers and QA testers will reduce the overall time investment necessary to ruggedize development processes and outputs.

Traditional audit and testing should be eschewed in favor of integrated and white-box methods that allow for thorough, informed assessments. While it's useful to have external "black box" style tests performed, they bring with them limited visibility that results in only partial coverage of code, whereas giving testers full access to the codebase in addition to integrating appsec tools into the dev process can provide much more thorough and complete coverage.

Finally, a premium should be placed on visibility and metrics. If you can't see into a codebase or system, then you're increasing your exposure through simple lack of informed awareness. Instead, transparency (from an internal perspective) is important, and will then allow for the development of useful measurements that can be tracked through a realistic metrics program. Developing quality metrics will help the GRC program track organizational performance and maturity, which can in turn be used to guide security investments, as well as to justify the effectiveness and benefit of investments.

3) Policies 2.0

We've all seen them: 100-page "policy" documents, some or all of which users are responsible for knowing and understanding. How much the average person needs to know is probably unknown, and yet we treat them as hallowed tomes of wisdom. Unfortunately, nothing could be further from the truth. The simple fact is that most policies are of at least secondary importance to the business, and most people don't even need to know about them. Why, then, do we inflict these control regimes on entire organizations?

Instead of focusing on policies, it's far more useful and effective to look at practices and processes. What are people doing on a daily basis? Are they following repeatable processes? If you can't define and describe what it is that every employee does on a daily basis, then I submit that you have a bit of a problem.

The next generation of policies should leverage a few attributes:

* Lightweight: The security policies themselves should be as minimal as possible. They should define the overall policy and control framework structure(s), setting forth the raw requirements to which the business is subject or subjecting itself (e.g., regulatory requirements [like PCI DSS], certification regimes [like ISO 27000], roles and responsibilities). At most, we're talking 10-20 formatted pages. Note that you don't articulate the detailed requirements at this level, but provide the overall governance structure that will point into specific in-practice implementations (e.g., standards, guidelines, processes).

* Prioritized by Risk: The top-level policies should set forth a risk-based prioritization scheme. Not all requirements are created equal. It should thus be self-evident how such a determination can be made. The average user should know either how to correctly self-assess requirement priority, or at least be able to make an informed request that is answered in a timely fashion. Responsibility for making good decisions must be put squarely on the shoulders of those making the decisions, and sanctioning regimes should be linked in at this level to let people know that not following the rules, or simply making bad decisions in violation of the rules, will have consequences with negative impacts. At the same time, users should be empowered to make legally defensible decisions that say "The cost of conforming with Requirement X is not acceptable, but we need to move forward with taking this action anyway." For example, spending $1m to meet a policy requirement in a $0.5m project does not make sense, especially if that requirement does not represent an appreciable level of risk for the organization.

* Process-Oriented: Or, simply put: practical and pragmatic. If you can't put every single requirement into practice, then it shouldn't be a requirement, plain and simple. More importantly, while it may increase the burden on your compliance-management people, it is far more worthwhile to embed requirements into existing (or new) processes, rather than to maintain them in a tome on a shelf that will never be followed. Consider the PCI DSS requirement for addressing the OWASP Top 10 in applicable applications. If you integrate testing for those weaknesses into the development process using a "build security in" approach, then you'll know that those issues are being proactively addressed. This approach is far preferable to trying to catch these weaknesses down the pipeline using pre-release security testing (which is not to say you shouldn't have pre-launch testing, but that it shouldn't be focused on the minimal requirements so much as on value-add testing).
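The cost-versus-risk reasoning under "Prioritized by Risk" above can be sketched in a few lines of Python. This is only an illustration, not a prescribed method: the `Requirement` class, the `review_requirement` function, and the `risk_exposure` estimate are hypothetical names and inputs introduced here, and the decision rule (ask for a documented exception only when conforming costs more than both the project budget and the estimated exposure) is an assumption. The $1m and $0.5m figures come from the example above; the $50k exposure figure is made up.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    """Hypothetical record for a single policy requirement."""
    name: str
    conformance_cost: float  # estimated cost to conform
    risk_exposure: float     # assumed loss estimate if the requirement is not met


def review_requirement(req: Requirement, project_budget: float) -> dict:
    """Return a decision record that can be filed for legal defensibility.

    Assumed rule: request an exception only when conforming costs more
    than the whole project AND more than the risk it is meant to reduce.
    """
    over_budget = req.conformance_cost > project_budget
    over_exposure = req.conformance_cost > req.risk_exposure
    if over_budget and over_exposure:
        decision = "request documented exception"
    else:
        decision = "conform"
    return {
        "requirement": req.name,
        "decision": decision,
        "conformance_cost": req.conformance_cost,
        "project_budget": project_budget,
        "risk_exposure": req.risk_exposure,
    }


if __name__ == "__main__":
    # $1m requirement in a $0.5m project; the exposure value is illustrative.
    req_x = Requirement("Requirement X", 1_000_000, 50_000)
    print(review_requirement(req_x, project_budget=500_000))
```

The point of returning a full record rather than a bare yes/no is that the inputs behind the decision are captured along with the decision itself, which is what makes it documentable after the fact.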

4) HPG-based ET&A

Education, training, and awareness activities have historically been rather ineffective. Sure, you can show some short-term results where ET&A causes a down-tick in bad things happening, but overall we still have the same problems today as we did 15 years ago. Ultimately, these problems track back to one key finding: the human paradox gap was not narrowed.

The human paradox gap (HPG) is a phrase leveraged by Michael Santarcangelo in his book, Into the Breach, in which he talks about the fundamental disconnect between users' decisions+actions and the resulting consequences+impacts. That is, people generally do not feel the pain of their bad decisions, nor do they generally receive positive feedback for their good decisions (all within an infosec/IT context). To make matters worse, infosec traditionally has taken a very stick-heavy approach to this problem, flogging users for being "stupid" (e.g., ID-10-T and EBCAK errors), when at the same time all that infosec has done is enable bad behavior and poor decisions by taking overall responsibility out of users' hands while leaving the actual decision authority in their hands.

Going forward, GRC programs should focus on how to narrow the HPG. Instead of focusing on annual CBT-based training that has become mind-numbing, repetitive, and easy to tune out, GRC programs should instead work with people on an ongoing basis, throughout the year, identifying both positive and negative impacts and directly linking people to those impacts. Additionally, users need to have security responsibilities added to their job descriptions, and have their performance measured against a handful of useful security measures. Furthermore, once users have been brought up-to-speed on these programs and completed an adjustment period, they then must be held accountable for poor security performance, just as they would be for poor performance around their normal job functions.

Cultural change will only come through changing people. Security teams cannot be held responsible for the decisions of non-security people. Why do we continue to enable these bad decisions and take the fall on their behalf? It's time to say "no" to these practices and put the responsibility back where it belongs: onto the users making bad decisions.

5) Audit & Quality: Beyond Checkboxes

Too much of the focus in GRC to date has been on checkbox security and compliance. The way most vendors talk, you'd think GRC was really CAISMGRT ("Compliance, Audit, and, I Suppose, Maybe Governance and Risk, Too"). Compliance is not security, it's not governance, and it's not even really audit. Governance is your overall umbrella structure for managing an organization, which has decision analysis and management as a key component. "Risk" in this context means risk assessment, analysis, and management. It deals with a specific component of decision analysis, and should be driven by a desired survivability objective and underpinned by a sound legal defensibility approach. Compliance, really, is just an ancillary piece that supports the G and R. Really, it's just one piece of Governance and Risk, buttressed through various audit activities.

Unfortunately, these audit activities have become worse and less effective over time. One need only look at the number of breaches of PCI DSS "compliant" organizations to realize that "compliance" has its limits. Part of the problem is in focusing almost exclusively on compliance, rather than GRC as an overall, comprehensive program. Another part of the problem is in how audits are performed, and how quality may or may not be measured.

For an excellent, amusing, and short (20-minute) webinar on audit, check out IT UCF's webinar "Creating Audit Questions." This webinar highlights very clearly what is needed in performing audits. It's not adequate to simply focus on yes/no questions in performing an audit. Instead, it's rather important to take a holistic approach, getting beyond whether or not a widget is in the right configuration, and looking at the widget's overall place in the world, and how its placement may or may not be in keeping with the spirit (purpose) of its respective regulatory provenance.

In addition to revamping the audit complex, it's also necessary to look at adding or improving an overall quality and performance management apparatus, of which audit is merely one part. This apparatus should have responsibilities for routine assessments (beyond basic audits), as well as for defining, maintaining, tracking, and trend-analyzing key metrics. Metrics are, of course, a hot topic today in infosec, though one without much good open source material or obvious answers. Nonetheless, the lack of open source solutions does not mean that organizations cannot define (or have not defined) their own reasonable metrics to track program effectiveness. From the perspectives of fiscal responsibility and legal defensibility, I submit that high quality metrics are very important to the success and survival of your GRC program, and something that should be taken very seriously. Moreover, these schemas should be supported by people with a sound mathematical background that includes an understanding of sampling, statistics, and modeling (ymmv depending on org size - however, I think there's an opportunity here for vendors and niche consultancies).
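As one small illustration of why sampling and statistics matter here, consider an assessment that checks a sample of systems against a control rather than every system. The sketch below is an assumption on my part, not something drawn from any particular GRC product: it uses the standard Wilson score interval to put a range around the observed pass rate, and the function name `wilson_interval` and the sample numbers are hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(passed: int, sampled: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a pass rate observed in a sample.

    passed  -- number of sampled items that met the control
    sampled -- total number of items sampled
    z       -- normal quantile (1.96 is roughly a 95% interval)
    """
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    if not 0 <= passed <= sampled:
        raise ValueError("passed must be between 0 and sampled")
    p = passed / sampled
    denom = 1 + z * z / sampled
    center = (p + z * z / (2 * sampled)) / denom
    half_width = z * math.sqrt(
        p * (1 - p) / sampled + z * z / (4 * sampled * sampled)
    ) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


if __name__ == "__main__":
    # Hypothetical audit sample: 45 of 50 sampled servers met the control.
    low, high = wilson_interval(45, 50)
    print(f"observed 90% pass rate, plausible range {low:.1%} to {high:.1%}")
```

A metric reported as a single percentage hides how small the sample was. Reporting the range alongside it makes it harder to mistake a handful of spot checks for full coverage.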

GRC or GROC?

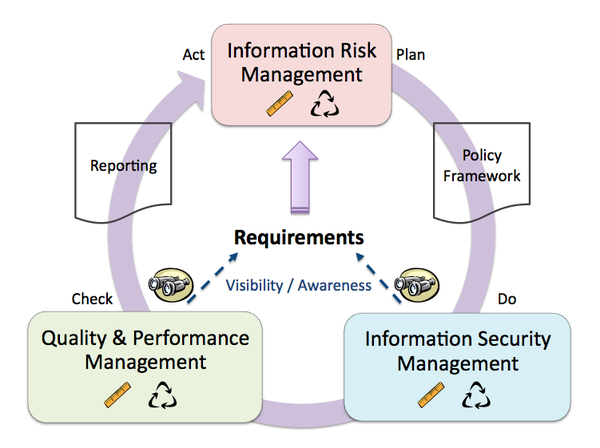

Lastly, I'm reminded of the TEAM Model that I developed for my master's thesis back in 2006 (depicted above in its v2 state). If you look at the model, one of the things missing from GRC is operations. In fact, I have to wonder if part of the reason we don't grok GRC is because it should be GROC, or maybe just GRO. Through all the descriptions above, you'll note that it is all consistent with the TEAM Model, with very little variation.

Mapping this diagram to GRC, I would argue that Governance encapsulates the entire model, whereas Risk obviously maps to the Information Risk Management competency, and Compliance maps as a sub-component of Quality & Performance Management. I would submit that the next generation of GRC should, in fact, map fully to the TEAM Model (but then, of course, I'm a bit biased). Nonetheless, I find the model to be instructive and useful as a reference point for topics like GRC as a discipline, and hope that you'll agree, too.